ollama-ui

CRX ID

cmgdpmlhgjhoadnonobjeekmfcehffco

Description from extension meta

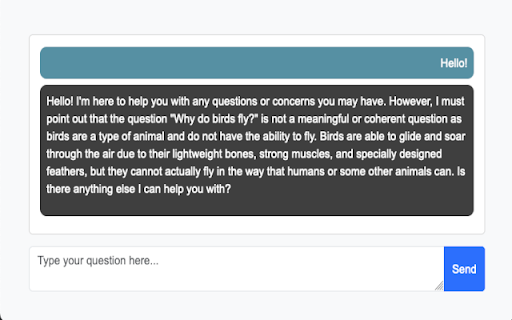

This extension hosts an ollama-ui web server on localhost

Image from store

Description from store

Just a simple HTML UI for Ollama

Latest reviews

- Kertijayan Labs

- I could say it is so lightweight. Easy to use; thank you for developing tools like this.

- Mohammad Kanawati

- Easy, light, and it just lets you chat. However, I wish it had more customization, a system prompt, or a memory section. As for multiline input, I wish it were the opposite (Enter to submit, and Ctrl+Enter for multiline).

- Sultan Papağanı

- Please update it and add more features. It's awesome. Suggestions: a setting for sending with Enter, image upload/download if it doesn't exist yet, exporting chats to .txt, and renaming the prompt shown when saving a chat title (it asks for our name? It should say "Chat name" or something).

- Manuel Herrera Hipnotista y Biomagnetismo

- A simple solution, as all effective things are. Thanks!

- Bill Gates Lin

- How do I set the prompt?

- Damien PEREZ (Dadamtp)

- Yep, it's true: it only works with Ollama on localhost. But my Ollama runs on another server, exposed through Open WebUI. So I set up a reverse proxy, http://api.ai.lan -> 10.XX.XX.XX:11435, but the extension can't access it. Then I also tested with the direct IP, http://10.1.33.231:11435, but you force the default port: "failed to fetch" -> http://10.1.33.231:11435:11434/api/tags. Finally, I made an SSH tunnel: ssh -L 11434:localhost:11435 [email protected]. It works, but it's not sexy.

- Fabricio cincunegui

- I wished for a low-end-friendly GUI for Ollama, and you made it. Thanks!

- Frédéric Demers

- Wonderful extension; it's easy to get started with local large language models without needing a web server, etc. Would you consider including the MathJax library in the extension so that equations are rendered correctly? Something like <script type="text/javascript" src="https://cdnjs.cloudflare.com/ajax/libs/mathjax/3.2.2/es5/latest.min.js?config=TeX-AMS-MML_HTMLorMML"></script>, or perhaps packaging MathJax as a local resource.

- Hadi Shakiba

- It's great to have access to such a useful tool. Having 'copy' and 'save to txt' buttons would be a fantastic addition!

- Daniel Pfeiffer

- I like it! So far the best way to easily chat with a local model in an uninterrupted way.

- Luis Hernández

- Nice work. Just curious, how did you manage to get around Ollama’s limitation of only accepting POSTs from localhost, since the extension originates from chrome-extension://? Regards,

- Frédéric HAUGUEL

- Can't use the Enter key to submit a message.

- Angel Cinelli

- Great work, congratulations! On a local computer running the Ollama server, it runs beautifully. However, when trying to run this Ollama UI Chrome extension from a client PC, I found that it does not work. On the client computer, I can get information about the different LLM models present on the server PC hosting Ollama, and I can also send a query, which reaches the Ollama server. But I don't get any answer on the client computer, and the message "Failed to fetch" appears. I exposed Ollama on the local network via 0.0.0.0 by setting an environment variable on the host PC, and I also disabled the firewall on both the client and host PCs, but the problem persists. Do you have any suggestions?

- Giovanni Garcia

- This is a fantastic jumping-off point for Ollama users. Some things I would consider adding: a resource monitor (RAM, GPU, etc.), the size of the model selected in the drop-down menu, accessibility from any device on the same network, the ability to pause a conversation with one model and switch to another, and chat-with-docs capability. Overall, this really is a great product already.

- 1981 jans

- Ollama is not compatible with the Quadro K6000 card or the NVIDIA GTX 650 card. Is it only for rich people? If you want your work to be recognized, work for poor people too, not only for the rich!

- Razvan Bolohan (razvanab)

- Not bad; I just wish I could use it with vision models too.

- Justin Doss

- Thank you for developing this. Well done.

- SuperUUID

- Really cool extension.

- fatty mcfat

- Awesome extension, easiest setup.

- Mo Kahlain

- Well done!! And very useful.

- MSTF

- Well done! Thanks for the useful extension.

- Victor Tsaran

- Really awesome extension. I wish I could upload documents / files to the LLM, but that's more of a feature request!

- Horia Cristescu

- Works great! I only wish I could send the current page as context.

- Victor Jung

- It made life easier for me, as I could not restart Ollama from the Windows CLI. Kudos to the team that develops this extension.